Understanding how information is transmitted and transformed within LLM-based multi-agent systems is crucial for effective collaborative AI. This project investigates the "telephone game" phenomenon in a tree-structured agent network, using paraphrasing models to simulate message propagation. Our analysis reveals significant message degradation, measured with ROUGE (lexical overlap) and cosine similarity (semantic similarity), with information loss increasing at deeper tree levels.

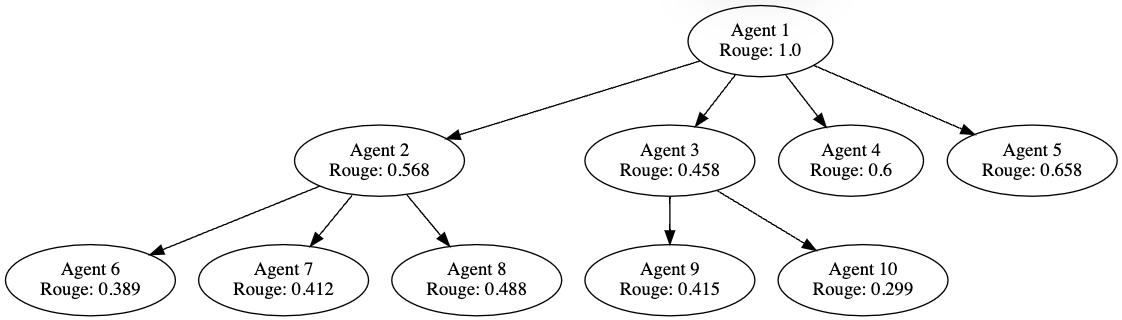

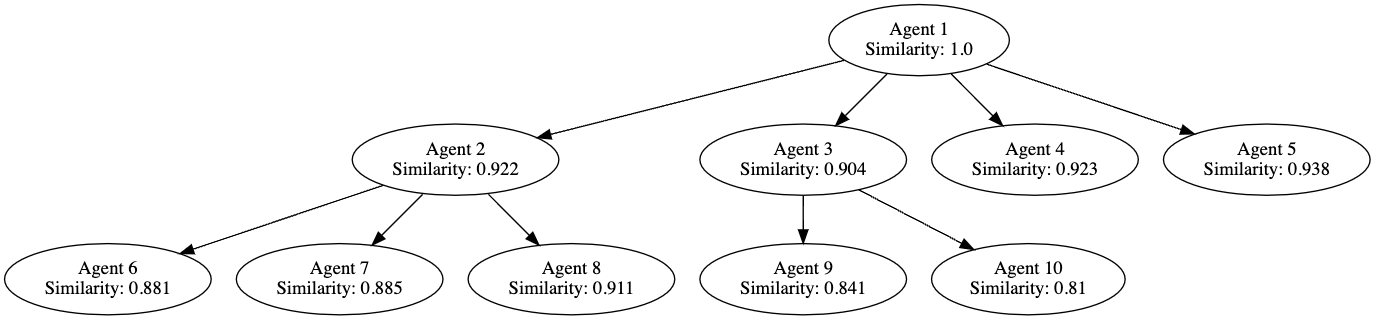

A visualization of our agent communication tree, showing how information transforms as it propagates from the root agent to the leaf agents. The graphs show both ROUGE scores (lexical preservation) and cosine similarity scores (semantic preservation) decreasing at each level of propagation.

Our experiment demonstrates how an initially clear instruction becomes increasingly distorted as it's passed between agents using paraphrasing LLMs. Original recipe instructions about lime juice preparation evolve to include non-existent ingredients and procedures by the third generation of message transmission.

What did you try to do? What problem did you try to solve? Articulate your objectives using absolutely no jargon.

Throughout history, human communication demonstrates that information rarely remains unchanged as it passes from person to person. Stories, legends, and historical events evolve over time, shaped by biases, interpretations, and conflicting memories of those who transmit them. The legend of King Arthur illustrates this phenomenon, as it has been told and adapted by many authors throughout centuries. We investigate whether large language models (LLMs) exhibit similar behaviors in information transmission. Using a simulated "telephone game" experiment with multiple successors rather than linear chains, we aim to understand how messages transform as they propagate through a network of AI agents, similar to information spread in human populations.

How is it done today, and what are the limits of current practice?

While extensive research has explored the behavior of individual LLMs, studies on their interaction are relatively limited. Existing research on the telephone game with LLMs primarily focuses on linear chains of transmission. For example, one study by Perez et al. (2024) tracked how text properties like toxicity, positivity, difficulty, and length evolve through iterated interactions between LLMs. Another multilingual study by Mohamed et al. (2025) demonstrated that distortion increases with time and complexity but can be mitigated with strategic prompting. However, these studies are limited to linear chains, which don't accurately reflect how information spreads in real-world populations or collaborative multi-agent systems.

Who cares? If you are successful, what difference will it make?

Understanding information transmission between LLMs has critical implications for collaborative AI systems, particularly in applications like swarm robotics. Imagine a swarm of robots collaborating on a common task, where only one robot receives the initial instructions. If each robot has its own LLM, the message must propagate accurately through the swarm. Any distortion could cause robots to pursue different objectives, compromising collaborative efficiency. Our research not only enhances understanding of LLMs' potential for cumulative cultural evolution and their vulnerability to biases but also provides key insights for designing reliable AI communication systems where message integrity is essential for coordinated action. The findings could inform better agent communication protocols, more robust information sharing mechanisms, and improved task delegation in complex AI systems.

What did you do exactly? How did you solve the problem? Why did you think it would be successful? Is anything new in your approach?

TODO

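To measure information degradation, we applied two key metrics: ROUGE-1, which measures lexical overlap with the original message, and cosine similarity, which measures semantic similarity to it.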
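As a rough illustration of how two such metrics can be computed, the sketch below assumes they are ROUGE-1 and cosine similarity, as reported in the results. It is not the project's evaluation code. The ROUGE variant is assumed to be F1, the tokenizer is a simple regex, and `bow_vectors` is a hypothetical stand-in that builds word-count vectors where a real pipeline would most likely use sentence embeddings.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into alphanumeric word tokens (a simplifying assumption)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rouge1_f1(reference, candidate):
    """Unigram-overlap F1 between a reference message and a candidate message."""
    ref, cand = Counter(tokenize(reference)), Counter(tokenize(candidate))
    overlap = sum((ref & cand).values())  # clipped unigram matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length numeric vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def bow_vectors(text_a, text_b):
    """Hypothetical stand-in for embeddings: word-count vectors over a shared vocabulary."""
    a, b = Counter(tokenize(text_a)), Counter(tokenize(text_b))
    vocab = sorted(set(a) | set(b))
    return [a[w] for w in vocab], [b[w] for w in vocab]
```

Each agent's message would be scored against the root message, for example `rouge1_f1(root_message, agent_message)` and `cosine_similarity(*bow_vectors(root_message, agent_message))`. Word-count vectors only capture shared vocabulary, so swapping in an embedding model is what would make the cosine score reflect meaning, not just wording.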

What problems did you anticipate? What problems did you encounter? Did the very first thing you tried work?

TODO

How did you measure success? What experiments were used? What were the results, both quantitative and qualitative? Did you succeed? Did you fail? Why?

We measured information preservation through two metrics: ROUGE-1 scores (measuring lexical overlap) and cosine similarity (measuring semantic similarity). Our experiment with a 10-agent tree revealed several key findings:

| Agent | Generation | ROUGE-1 Score | Cosine Similarity |
|---|---|---|---|
| 1 (Root) | 0 | 1.0 | 1.0 |
| First-level (2-5) | 1 | 0.45-0.65 | 0.90-0.94 |
| Second-level (6-10) | 2 | 0.30-0.49 | 0.81-0.91 |
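The three generations in the table can be reproduced structurally with a short simulation. The sketch below is a hedged illustration only. The `CHILDREN` mapping is a hypothetical topology, since the write-up does not say which first-level agent is the parent of each second-level agent. `toy_paraphrase` is a placeholder for the paraphrasing LLM call, and it simply drops the last word to mimic lossy rewriting.

```python
from collections import deque

# Hypothetical topology: agent 1 is the root, agents 2-5 are its children,
# and agents 6-10 are spread across the first level (assignment is assumed).
CHILDREN = {1: [2, 3, 4, 5], 2: [6, 7], 3: [8], 4: [9], 5: [10]}


def propagate(root_message, children, paraphrase, root=1):
    """Breadth-first pass in which each child stores a paraphrase of its parent's message.

    Returns a dict mapping agent id to (generation, message).
    """
    received = {root: (0, root_message)}
    queue = deque([root])
    while queue:
        parent = queue.popleft()
        generation, message = received[parent]
        for child in children.get(parent, []):
            received[child] = (generation + 1, paraphrase(message))
            queue.append(child)
    return received


def toy_paraphrase(message):
    """Placeholder for an LLM call: drops the final word to mimic lossy rewriting."""
    words = message.split()
    return " ".join(words[:-1]) if len(words) > 1 else message


if __name__ == "__main__":
    result = propagate("squeeze the fresh lime juice", CHILDREN, toy_paraphrase)
    for agent, (generation, message) in sorted(result.items()):
        print(agent, generation, message)
```

Passing the paraphraser in as a function keeps the traversal independent of any particular model, so the same loop could be reused with a real LLM call and the per-agent messages scored against the root message afterwards.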

TODO